Overview

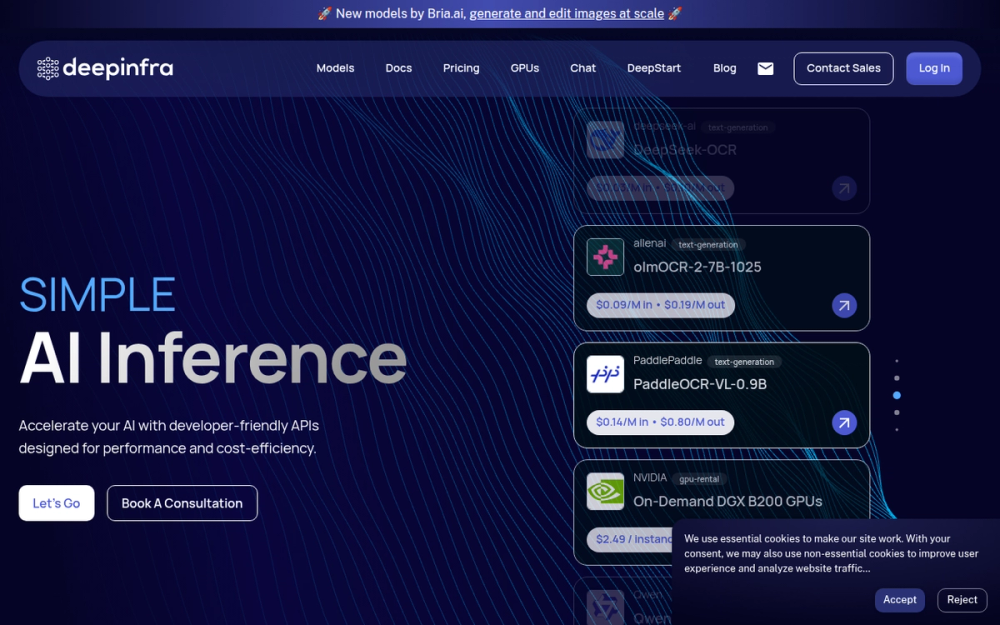

DeepInfra is a developer-friendly AI inference platform designed for performance and cost efficiency. Running on cutting-edge infrastructure in secure US-based data centers, DeepInfra provides access to 100+ state-of-the-art models, including leading open-source models, through simple APIs backed by hands-on technical support.

Key Features

🚀 Fast & Reliable Infrastructure

- Optimized inference on DeepInfra-owned hardware

- Secure US-based data centers

- Low time to first token (TTFT)

- Aggregate throughput of millions of tokens per second

- Zero cold starts and high availability

💰 Unbeatable Pricing

Low pay-as-you-go pricing with no long-term contracts (prices in USD per million tokens):

- DeepSeek-OCR: $0.03/M input, $0.10/M output

- Qwen3-Coder-30B: $0.07/M input, $0.26/M output

- GLM-4.6: $0.45/M input, $1.90/M output

- DeepSeek-V3.1: $0.27/M input, $1.00/M output

- No hidden fees, no surprises
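Because billing is per token, the cost of a workload is a weighted sum of its input and output tokens. The minimal Python sketch below uses the prices listed above as a snapshot (published prices can change, so check the current pricing page before budgeting); `PRICES_PER_MILLION` and `estimate_cost` are hypothetical names chosen for illustration, not part of any DeepInfra SDK.

```python
from decimal import Decimal

# Snapshot of the per-million-token prices listed above (USD).
# Hypothetical lookup table for illustration; real prices may change.
PRICES_PER_MILLION = {
    "DeepSeek-OCR": (Decimal("0.03"), Decimal("0.10")),
    "Qwen3-Coder-30B": (Decimal("0.07"), Decimal("0.26")),
    "GLM-4.6": (Decimal("0.45"), Decimal("1.90")),
    "DeepSeek-V3.1": (Decimal("0.27"), Decimal("1.00")),
}

MILLION = Decimal(1_000_000)


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> Decimal:
    """Estimate the pay-as-you-go cost in USD for a given token volume."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    price_in, price_out = PRICES_PER_MILLION[model]
    # Decimal avoids binary floating-point rounding in currency arithmetic.
    return (Decimal(input_tokens) * price_in + Decimal(output_tokens) * price_out) / MILLION


if __name__ == "__main__":
    # 2M input + 500k output tokens on GLM-4.6: 2 * 0.45 + 0.5 * 1.90 = 1.85 USD
    print(estimate_cost("GLM-4.6", 2_000_000, 500_000))
```

Using `Decimal` rather than `float` keeps sums such as 0.90 + 0.95 exact, which matters when costs are aggregated over many requests.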

🎯 Comprehensive Model Library

Text Generation (LLMs)

- DeepSeek V3.1, V3.2-Exp, OCR models

- Qwen3-Coder, Qwen3-Next series

- Claude 3.7 Sonnet, Claude 4 Opus

- Llama, Mistral, Gemini families

- Kimi K2, GLM-4.6, and more

Multimodal Capabilities

- Text-to-Image: Flux, Stable Diffusion models

- Speech Recognition: Speech-to-text transcription

- Text-to-Speech: Voice synthesis

- Text-to-Video: Video generation

- Embeddings & Reranking: Semantic search support

- Image Classification: Zero-shot classification
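Semantic search with embeddings usually comes down to comparing a query vector against document vectors. The sketch below shows only that ranking step, using cosine similarity; the three-dimensional vectors are toy placeholders standing in for real embedding-model output, and `rank_documents` is a hypothetical helper. A reranking model would typically rescore the top candidates afterward, which is not shown here.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_documents(
    query_vec: Sequence[float],
    doc_vecs: Sequence[Sequence[float]],
    top_k: int = 3,
) -> List[Tuple[int, float]]:
    """Return (document index, score) pairs, best match first."""
    scored = [(i, cosine_similarity(query_vec, v)) for i, v in enumerate(doc_vecs)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    # Toy vectors; in practice these come from an embedding model.
    query = [1.0, 0.0, 0.0]
    docs = [[0.0, 1.0, 0.0], [1.0, 0.0, 0.0], [0.7, 0.7, 0.0]]
    print(rank_documents(query, docs))
```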

🔒 Enterprise-Grade Security

- ✅ Zero Retention Policy: Your inputs, outputs, and user data stay private

- ✅ SOC 2 Compliant: Industry-standard security controls

- ✅ ISO 27001 Certified: Information security management

- ✅ GDPR Compliant: EU data protection standards

- ✅ Best practices in privacy and security

Model Families

Access popular model families including:

- 🤖 anthropic/Claude

- 🧠 deepseek-ai/DeepSeek

- ⚡ black-forest-labs/Flux

- 🔷 google/Gemini

- 🦙 meta-llama/Llama

- 🌟 mistralai/Mistral

- 🎮 nvidia/Nemotron

- 📚 qwen/Qwen
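Models are addressed by `organization/model` identifiers like the families above. As a minimal sketch, assuming DeepInfra's OpenAI-compatible chat completions endpoint at `https://api.deepinfra.com/v1/openai/chat/completions` and the model ID `deepseek-ai/DeepSeek-V3.1` (verify both against the official API documentation), a request can be built and sent with only the Python standard library. The `DEEPINFRA_API_KEY` environment variable name is an assumption made for this example.

```python
import json
import os
import urllib.request

# Assumption: DeepInfra's OpenAI-compatible chat endpoint; confirm in the official docs.
DEEPINFRA_CHAT_URL = "https://api.deepinfra.com/v1/openai/chat/completions"


def build_chat_request(model: str, prompt: str, max_tokens: int = 256) -> dict:
    """Build an OpenAI-style chat completion payload for an org/model ID."""
    if "/" not in model:
        raise ValueError("expected an 'org/model' ID such as 'deepseek-ai/DeepSeek-V3.1'")
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


def send_chat_request(payload: dict, api_key: str) -> dict:
    """POST the payload with a bearer token and return the decoded JSON response."""
    req = urllib.request.Request(
        DEEPINFRA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=60) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    # Hypothetical model ID and environment variable name, for illustration only.
    payload = build_chat_request("deepseek-ai/DeepSeek-V3.1", "Explain RAG in one sentence.")
    api_key = os.environ.get("DEEPINFRA_API_KEY")
    if api_key:
        reply = send_chat_request(payload, api_key)
        print(reply["choices"][0]["message"]["content"])
    else:
        # Without a key, just show the request body that would be sent.
        print(json.dumps(payload, indent=2))
```

Keeping payload construction separate from the network call makes the request shape easy to inspect and test without credentials.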

Flexible Infrastructure Options

Serverless Inference

- Pay only for what you use

- Auto-scaling capabilities

- No infrastructure management

GPU Rental

- On-Demand DGX B200 GPUs

- Custom dedicated instances

- Starting from $2.49/instance-hour
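As a back-of-the-envelope comparison between serverless and rented capacity, the sketch below computes what continuous rental costs at the listed starting rate and how many million tokens of serverless usage would cost the same. It assumes a single blended per-million-token price that you supply, and it ignores whether one instance can actually serve that volume, which depends on the model and hardware. `dedicated_cost` and `break_even_million_tokens` are hypothetical helper names.

```python
from decimal import Decimal

# Listed "starting from" rate per instance-hour (USD); actual rates vary by configuration.
STARTING_HOURLY_RATE = Decimal("2.49")


def dedicated_cost(hours: int, hourly_rate: Decimal = STARTING_HOURLY_RATE) -> Decimal:
    """Cost in USD of renting one instance for the given number of hours."""
    if hours < 0:
        raise ValueError("hours must be non-negative")
    return Decimal(hours) * hourly_rate


def break_even_million_tokens(
    hours: int,
    blended_price_per_million: Decimal,
    hourly_rate: Decimal = STARTING_HOURLY_RATE,
) -> Decimal:
    """Serverless volume (millions of tokens) costing the same as renting for `hours`."""
    if blended_price_per_million <= 0:
        raise ValueError("blended price must be positive")
    return dedicated_cost(hours, hourly_rate) / blended_price_per_million


if __name__ == "__main__":
    hours = 24 * 30  # one 30-day month of continuous rental
    print("dedicated cost:", dedicated_cost(hours))
    print("break-even at $1.00/M:", break_even_million_tokens(hours, Decimal("1.00")))
```

For example, 720 hours at $2.49 comes to $1,792.80, which equals about 1,793 million serverless tokens at a blended $1.00 per million.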

Custom Hosting

- Host your own models on DeepInfra servers

- Low cost, high privacy

- Full control over deployments

Use Cases

- Code Generation: IDE assistants, code completion, debugging

- Conversational AI: Chatbots, virtual assistants

- Content Creation: Text, image, and video generation

- Document Processing: OCR, PDF parsing, text extraction

- Search & RAG: Embeddings, semantic search, reranking

- Multi-agent Systems: Complex reasoning and tool use

Trusted by Leading Companies

Used by Abacus.AI, Hugging Face, interface.ai, Salesforce, Requesty, and hundreds of startups and enterprises worldwide.

Real-time Performance Metrics

DeepInfra provides transparent live metrics:

- Tokens per second throughput

- Time to first token latency

- Requests per second capacity

- Computational power (exaFLOPS)
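Time to first token and tokens per second can also be measured from the client side of a single streamed request. The sketch below derives both from timestamps you record yourself as tokens arrive (for example with `time.perf_counter()`); unlike the platform-wide figures above, it is a per-request measurement, and `compute_stream_metrics` is a hypothetical helper. Throughput here counts only the tokens after the first, divided by the time between the first and last token.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class StreamMetrics:
    time_to_first_token: float  # seconds from request start to the first token
    tokens_per_second: float    # generation rate after the first token arrives


def compute_stream_metrics(request_start: float, token_times: Sequence[float]) -> StreamMetrics:
    """Compute TTFT and throughput from per-token arrival timestamps (seconds)."""
    if not token_times:
        raise ValueError("no tokens received")
    if any(t < request_start for t in token_times):
        raise ValueError("token timestamps must not precede the request start")
    ttft = token_times[0] - request_start
    window = token_times[-1] - token_times[0]
    # With a single token there is no generation window, so report 0.0.
    tps = (len(token_times) - 1) / window if window > 0 else 0.0
    return StreamMetrics(time_to_first_token=ttft, tokens_per_second=tps)


if __name__ == "__main__":
    # Request sent at t=0.0 s; five tokens arrive every 0.5 s starting at 0.5 s.
    print(compute_stream_metrics(0.0, [0.5, 1.0, 1.5, 2.0, 2.5]))
```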

Why Choose DeepInfra

✨ Scale to trillions of tokens without breaking the bank

⚡ Inference tailored to you - optimize for cost, latency, or throughput

🔐 Zero retention & compliant - your data stays private

🏗️ Own hardware, own data centers - better performance for you